This material is not a textbook or a review; it cannot replace either of these. We shall simply draw the attention of the user to a few points which seem to us particularly relevant from a practical simulation modeling point of view. Although this material does not attempt to address philosophical issues, we shall nevertheless point to a few epistemological questions and leave them for further thought.

Model evaluation

Model evaluation comes first. Entire, excellent books are devoted to this subject; again, this short text has no ambition to replace them. Shannon (1975) posed two questions on the interpretation and validation of models:

- what is meant by establishing validity?, and

- what criteria should be used?

In his PhD dissertation and a seminal article on model evaluation, Teng (1981) indicated three views that can be taken about validation:

- The rationalist view: "Rationalism holds that a model is simply a system of logical deductions made from a set of premises of unquestionable truth, which may or may not themselves be subject to empirical or objective testing. In its strictest sense, the premises are what Immanuel Kant termed synthetic a priori premises."

- The empiricist view: "The empiricist holds that if any of the postulates or assumptions used in a model cannot be independently verified by experiment, or analysis of experimental data, then the model cannot be considered valid. In its strictest sense, empiricism states that models should be developed only using proven or verifiable facts, not assumptions."

- The positivist view: "The positivist states that a model is valid only if it is capable of accurate predictions, regardless of its internal structure or underlying logic. Positivism, therefore, shifts the emphasis away from model building to model utility."

Modeling of biological systems borrows elements from the three points of view, to varying degrees, depending on the modeling objectives. It borrows elements from the rationalist view, because we try our best to incorporate elements of reality; we are well aware, however, that the system and the corresponding model, regardless of their complexity, are only simplifications of reality. It borrows elements from the empiricist view, as we try to incorporate as much experimentally measured data (as parameters or response functions) as we can; yet determining a set of genuine assumptions can be very hard, especially when one considers the stochasticity of nature, and testing the various components of a system does not necessarily amount to evaluating the entire system, where these components interact. Lastly, it borrows elements from the positivist view, as the aim of model development is to serve a purpose; a question remains, however, as to whether any model would be acceptable based solely on its performance, regardless of the scientific value of its logic.

"Model evaluation" and "model validation" are often used interchangeably. The two expressions, however, do not address the same objectives or processes (Thornley and France, 2007):

- Model evaluation is used to include all methods of critiquing a model, whereas

- Model validation is the demonstration that, within a specified domain of application, a model yields acceptable predictive accuracy over that domain.

As a consequence, one has to recognize again that, being derived from an a priori view of reality, system simulation models are always incomplete, and thus model evaluation can only be incomplete as well. On the other hand, validity is not a property of a model alone, but also of the observations against which it is being tested. Thus, validation involves the use of actual data (and their inherent errors), in actual circumstances (experiments in specific set-ups, in specific situations). The perception of simulation models as "gigantic regression equations" (Thornley and France, 2007) is misguided; yet it is not surprising that efforts are still invested in developing simulation models and attempting conclusive validation. These contrasting points of view are actually linked to the objective and evaluation of models, which are briefly outlined below.

For now, borrowing from Teng (1981), Rabbinge et al. (1983), and Thornley and France (2007), one can suggest the following steps for model evaluation:

- model verification: checking that the programming structure and the computations are performed as expected;

- visual assessment of the outputs of the model and its behavior: assessing whether the model's outputs conform with the expected overall behavior of a system;

- quantitative assessments of the model's outputs against numerical observations, for which a large number of procedures exist. A starting point is to consider that common statistical testing aims at rejecting the hypothesis H0 that the distributions of observed and simulated values are identical. Testing H0 in this way runs opposite, however, to the purpose of validation, where one wants to establish the sameness of outputs and observations. H1 is the hypothesis that the two distributions are different. The error of wrongly rejecting H1 (that is, concluding that outputs and observations agree when they actually differ, a Type II error) is the main concern in model evaluation, and entails a number of approaches, for which Thornley and France (2007) provide a starting point.
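The quantitative step can be illustrated with a minimal Python sketch. It is not a procedure taken from the works cited above: the deviation statistics (mean bias, root mean square error, modelling efficiency) and the bootstrap interval-inclusion check are common choices that we assume here for illustration only. The function names, the `margin` argument (the largest mean deviation one is prepared to call negligible), and the example numbers are all hypothetical. The equivalence check reverses the burden of proof: sameness is concluded only when the interval for the mean deviation lies entirely within the chosen margin.

```python
import math
import random
from statistics import mean


def deviation_statistics(observed, simulated):
    """Mean bias, RMSE, and modelling efficiency (1 - SSE/SST) of paired series."""
    if len(observed) != len(simulated) or len(observed) < 2:
        raise ValueError("need two paired series of equal length (n >= 2)")
    diffs = [s - o for o, s in zip(observed, simulated)]
    obs_mean = mean(observed)
    sse = sum(d * d for d in diffs)                      # squared deviations
    sst = sum((o - obs_mean) ** 2 for o in observed)     # spread of observations
    return {
        "bias": mean(diffs),
        "rmse": math.sqrt(sse / len(diffs)),
        "efficiency": 1.0 - sse / sst if sst > 0 else float("nan"),
    }


def bootstrap_equivalence(observed, simulated, margin,
                          n_boot=2000, alpha=0.05, seed=1):
    """Interval-inclusion equivalence check on the mean deviation.

    Assumption of this sketch: outputs are called equivalent to observations
    when the percentile bootstrap interval with tail probability `alpha` on
    each side lies strictly inside (-margin, +margin).
    """
    rng = random.Random(seed)                            # fixed seed: repeatable
    diffs = [s - o for o, s in zip(observed, simulated)]
    n = len(diffs)
    boot_means = sorted(mean(rng.choices(diffs, k=n)) for _ in range(n_boot))
    tail = int(alpha * n_boot)
    lower = boot_means[tail]
    upper = boot_means[n_boot - 1 - tail]
    return lower, upper, (-margin < lower and upper < margin)


if __name__ == "__main__":
    # Made-up illustrative values (e.g. % disease severity), not real data.
    observed = [2.0, 5.5, 11.0, 21.0, 34.5, 52.0]
    simulated = [1.5, 6.0, 12.5, 19.0, 36.0, 50.5]
    print(deviation_statistics(observed, simulated))
    print(bootstrap_equivalence(observed, simulated, margin=3.0))
```

The choice of the margin is a modeling decision, not a statistical one: it expresses the predictive accuracy deemed acceptable within the domain of application.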

Evolution of models

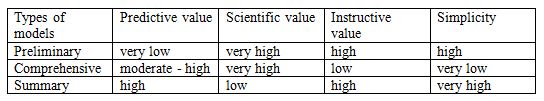

Modeling is an ongoing process, as new knowledge is made available, and model testing is undertaken. Penning de Vries (1982) distinguished three development phases:

- Preliminary models enable the communication, quantification, and evaluation of hypotheses. These models are developed at the frontier of knowledge, and are generally short lived. However, such models can be highly useful for the scientist in guiding research.

- Comprehensive models are built upon the former ones, as a result of knowledge accumulation. Comprehensive models are meant to be explanatory, because their structure, their parameters, and their driving functions are derived from actual experimental work and observations. Such models, however, are often large and intricate, making their communication difficult.

- Summary models are a necessary outcome of comprehensive models; they synthesize what appears to be their most important components. As stated by Penning de Vries (1982), "the" summary model of a comprehensive model does not exist: this depends on the depth and objective aimed at.

Table 10.1 summarizes the properties of these three types of models. One may note that the evaluation process and its ambitions will depend on the type of model.

Table 10.1. Relative values of models in different phases of development (From Penning de Vries, 1982)

Use of simulation models

A key value of simulation models is their heuristic value: they enable the mapping of processes that are assumed to take place in a given system. Success here depends on a proper choice of the system's limits and state variables, which also implies deciding which variables will be external to, i.e., independent from, the system, and act as driving variables. There is great flexibility in laying out links (as flows, or as numerical connections), or erasing them. One may see this as the sketching phase of a painter preparing her or his work. This stage, of course, is essential. This is where independence of thought and creativity lie. All the 'true' modeling work, too, will depend on it.

Laying out hypotheses is in part related to the previous stage. It involves more, however, because such hypotheses will be derived from the modeler's experience, or experiments. "No one can be a good observer unless he is a good theorizer" (Charles Darwin, quoted from Zadoks, 1972). We believe the reverse to be true too, and this is why modeling needs to combine both conceptual and actual experimental work. In doing so, the development of a (preliminary) model helps in guiding research: questions are asked, knowledge gaps are identified, and thus experiments for a purpose are designed. Modeling goes hand in hand with experimental work.

When enough hypotheses have been assembled, a next stage may consist in conducting verifications and preliminary scenario analyses: is the model behaving as one might expect, or does it go astray? In the latter case, one needs to reconsider the previous steps. An important effort is often required at this stage: epidemiological experts tend to look at a large number of details, and simplification is needed. This may entail pondering the time constants of the processes involved and ensuring that two levels of integration (in epidemiology: an epidemic and its underlying processes; in crop loss modeling: yield build-up and its determining, limiting, and reducing factors) are considered.

When one is satisfied, overall, with the model behavior, an evaluation phase is needed, as discussed above. This enables one of the most interesting outcomes of modeling, i.e., scenario analysis. Simulation modeling enables projections into possible futures. These futures may be materialized by the driving functions (quite a few plant pathologists are involved in climate change research, for instance; Garrett et al., 2010) or by the parameter values.
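Scenario analysis of this kind can be sketched in a few lines of Python. The example below is purely illustrative and is not one of the models discussed in this text: it assumes a single state variable (disease severity `x`), a logistic rate equation dx/dt = r(T) x (1 - x), a hypothetical triangular response of the rate `r` to temperature, and a made-up temperature driving function. Two kinds of scenarios are shown: one that alters the driving function (a warmer season) and one that alters a parameter value (a lower maximum rate, as a more resistant cultivar might produce).

```python
def infection_rate(temperature, r_max=0.25, t_opt=20.0, t_width=10.0):
    """Hypothetical response function: rate (per day) as a function of temperature.

    Triangular shape: r_max at t_opt, falling linearly to zero at t_opt +/- t_width.
    """
    return r_max * max(0.0, 1.0 - abs(temperature - t_opt) / t_width)


def simulate_epidemic(temperature_at, x0=0.001, days=60, dt=0.1, **rate_params):
    """Euler integration of dx/dt = r(T(t)) * x * (1 - x).

    `temperature_at` is the driving function (time in days -> temperature).
    Returns the severity at day 0, 1, ..., days.
    """
    steps_per_day = round(1 / dt)
    x = x0
    daily = [x]
    for day in range(days):
        for step in range(steps_per_day):
            t = day + step * dt
            r = infection_rate(temperature_at(t), **rate_params)
            x += dt * r * x * (1.0 - x)      # rate of change times time step
        daily.append(x)
    return daily


def baseline_temperature(t):
    """Made-up driving function: slow seasonal warming from 15 degrees C."""
    return 15.0 + 0.05 * t


def warmer(base, shift):
    """Build a new driving function: the baseline shifted by `shift` degrees."""
    return lambda t: base(t) + shift


def run_scenarios():
    """Final severity under a baseline, a driving-function and a parameter scenario."""
    return {
        "baseline": simulate_epidemic(baseline_temperature)[-1],
        "warmer (+2 C)": simulate_epidemic(warmer(baseline_temperature, 2.0))[-1],
        "lower r_max": simulate_epidemic(baseline_temperature, r_max=0.125)[-1],
    }


if __name__ == "__main__":
    for name, final_severity in run_scenarios().items():
        print(f"{name}: final severity = {final_severity:.3f}")
```

Keeping the driving function as an argument, separate from the parameters, mirrors the distinction made above: the same model structure is run unchanged, and only what lies outside the system, or the values of its parameters, differs between scenarios.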

Another use of models is to conduct simulated experiments. Luo and Zeng (1995), for instance, provide a fine example of such work on components of partial resistance to yellow rust of wheat, with strong links with experimental work.

Model simplification can then lead to important outcomes from the practical standpoint. One, of course, is disease management, for which quite a number of models have been developed. One of many examples is SIMCAST (Fry et al., 1983; Grünwald et al., 2000) for potato late blight. Again, simulation modeling can become a very powerful scoping approach. Combining this potato late blight management model with a GIS, Hijmans et al. (2000) paved the way towards global geophytopathology and risk assessment. This major outcome of decades of research is one example, which should encourage plant pathologists, and biologists in general, to engage in a field which, we believe, is still full of promise.

References

Fry, W. E., Apple, A. E., and Bruhn, J. A. 1983. Evaluation of potato late blight forecasts modified to incorporate host resistance and fungicide weathering. Phytopathology 73:1054-1059.

Garrett, K. A., Forbes, G. A., Savary, S., Skelsey, P., Sparks, A. H., Valdivia, C., van Bruggen, A. H. C., Willocquet, L., Djurle, A., Duveiller, E., Eckersten, H., Pande, S., Vera Cruz, C., and Yuen, J. 2010. Complexity in climate change impacts: a framework for analysis of effects mediated by plant disease. Plant Pathol. 60:15-30.

Grünwald, N. J., Rubio-Covarrubias, O. A., and Fry, W. E. 2000. Potato late-blight management in the Toluca Valley: forecasts and resistant cultivars. Plant Dis. 84:410-416.

Hijmans, R. J., Forbes, G., and Walker, T. S. 2000. Estimating the global severity of potato late blight with GIS-linked disease forecast models. Plant Pathol. 49:697-705.

Luo, Y., and Zeng, S. M. 1995. Simulation studies on epidemics of wheat stripe rust (Puccinia striiformis) on slow rusting cultivars and analysis of effects of resistance components. Plant Pathol. 44:340-349.

Penning de Vries, F. W. T. 1982. Phases of development of models. Pages 21-25 in: Simulation of Plant Growth and Crop Production. F. W. T. Penning de Vries and H. H. Van Laar, eds. Pudoc, Wageningen.

Shannon, R. E. 1975. Systems Simulation: The Art and Science. Prentice Hall, NJ.

Teng, P. S. 1981. Validation of computer models of plant disease epidemics: a review of philosophy and methodology. Z. Pflanzenkrank. Pflanzensch. 88:49-63.

Thornley, J. H. M., and France, J. 2007. Mathematical Models in Agriculture. Quantitative Methods for the Plant, Animal and Ecological Sciences, 2nd ed. CABI, Wallingford.

Zadoks, J. C. 1972. Methodology in epidemiological research. Annu. Rev. Phytopathol. 10:253-276.